Visual Object Navigation on Loomo robot

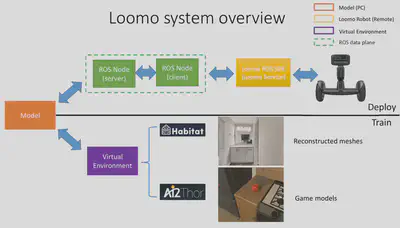

In this project, I built a framework to 1) train visual navigation models in a virtual environment and 2) deploy the trained models onto the Loomo robot. An overview of the framework is shown below.

The deployed end-to-end visual navigation model is adapted from the code released with “Visual Semantic Navigation using Scene Priors” (ICLR 2019).

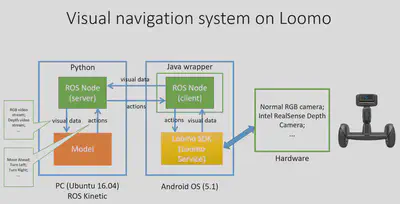
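Because the same model is first trained in a virtual environment and then deployed on Loomo, it helps if the policy only ever talks to one environment interface. The sketch below illustrates that idea under stated assumptions: the `NavEnv` interface, the `run_episode` helper, the toy simulator and the AI2-THOR-style action names (`MoveAhead`, `RotateLeft`, `RotateRight`, `Done`) are all hypothetical and are not taken from this project's code.

```python
from typing import Callable, List, Protocol, Sequence, Tuple

# Hypothetical discrete action set, loosely modeled on AI2-THOR-style actions.
ACTIONS: Tuple[str, ...] = ("MoveAhead", "RotateLeft", "RotateRight", "Done")


class NavEnv(Protocol):
    """Anything a policy can act in: a simulator in training, the robot at deployment."""

    def reset(self, target: str) -> Sequence[float]: ...

    def step(self, action: str) -> Tuple[Sequence[float], bool]: ...


# A policy maps (observation, target object name) to one action name.
Policy = Callable[[Sequence[float], str], str]


def run_episode(env: NavEnv, policy: Policy, target: str, max_steps: int = 50) -> List[str]:
    """Roll out a policy until it emits 'Done', the env reports done, or max_steps is hit."""
    obs = env.reset(target)
    taken: List[str] = []
    for _ in range(max_steps):
        action = policy(obs, target)
        taken.append(action)
        if action == "Done":
            break
        obs, done = env.step(action)
        if done:
            break
    return taken


class ToySimEnv:
    """Hypothetical stand-in simulator whose observation is just the distance travelled."""

    def __init__(self) -> None:
        self.pos = 0

    def reset(self, target: str) -> Sequence[float]:
        self.pos = 0
        return [float(self.pos)]

    def step(self, action: str) -> Tuple[Sequence[float], bool]:
        if action == "MoveAhead":
            self.pos += 1
        return [float(self.pos)], False


def toy_policy(obs: Sequence[float], target: str) -> str:
    # Walk forward until the toy observation reports 3 steps, then stop.
    return "Done" if obs[0] >= 3 else "MoveAhead"
```

For example, `run_episode(ToySimEnv(), toy_policy, "Television")` returns `["MoveAhead", "MoveAhead", "MoveAhead", "Done"]`. A robot-backed class with the same `reset`/`step` methods could then be swapped in at deployment without touching the policy code.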

The Loomo robot serves as the front end: it collects visual data and receives control commands from the model, which runs on a standalone PC. Implementation details of the visual navigation system on the robot are given in the figure below:
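The robot/PC split can be pictured as a simple request/response loop: the robot sends a camera frame, the PC runs the model and replies with a control command. The sketch below shows one way to do this over a socket; the 4-byte big-endian length-prefix framing, the `serve` loop and the string action replies are assumptions for illustration, not the protocol actually used between Loomo and the PC in this project.

```python
import socket
import struct
from typing import Callable, Optional

HEADER = struct.Struct(">I")  # assumed framing: 4-byte big-endian payload length


def recv_exact(sock: socket.socket, n: int) -> Optional[bytes]:
    """Read exactly n bytes, or return None if the peer closed the connection."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            return None
        buf.extend(chunk)
    return bytes(buf)


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_msg(sock: socket.socket) -> Optional[bytes]:
    """Receive one length-prefixed message, or None on a clean disconnect."""
    header = recv_exact(sock, HEADER.size)
    if header is None:
        return None
    (length,) = HEADER.unpack(header)
    return recv_exact(sock, length)


def serve(sock: socket.socket, model: Callable[[bytes], str]) -> int:
    """PC side: frame in, action command out. Returns how many frames were handled."""
    handled = 0
    while True:
        frame = recv_msg(sock)
        if frame is None:  # robot closed its sending side
            return handled
        action = model(frame)  # hypothetical: decode the image and run the network
        send_msg(sock, action.encode("utf-8"))
        handled += 1
```

A quick local check can use `socket.socketpair()`, with one end playing the robot: send two frames, call `robot.shutdown(socket.SHUT_WR)`, run `serve` on the other end, then read the two replies back with `recv_msg`. Length-prefixing is used here because TCP is a byte stream, so message boundaries for variable-size image frames have to be encoded explicitly.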