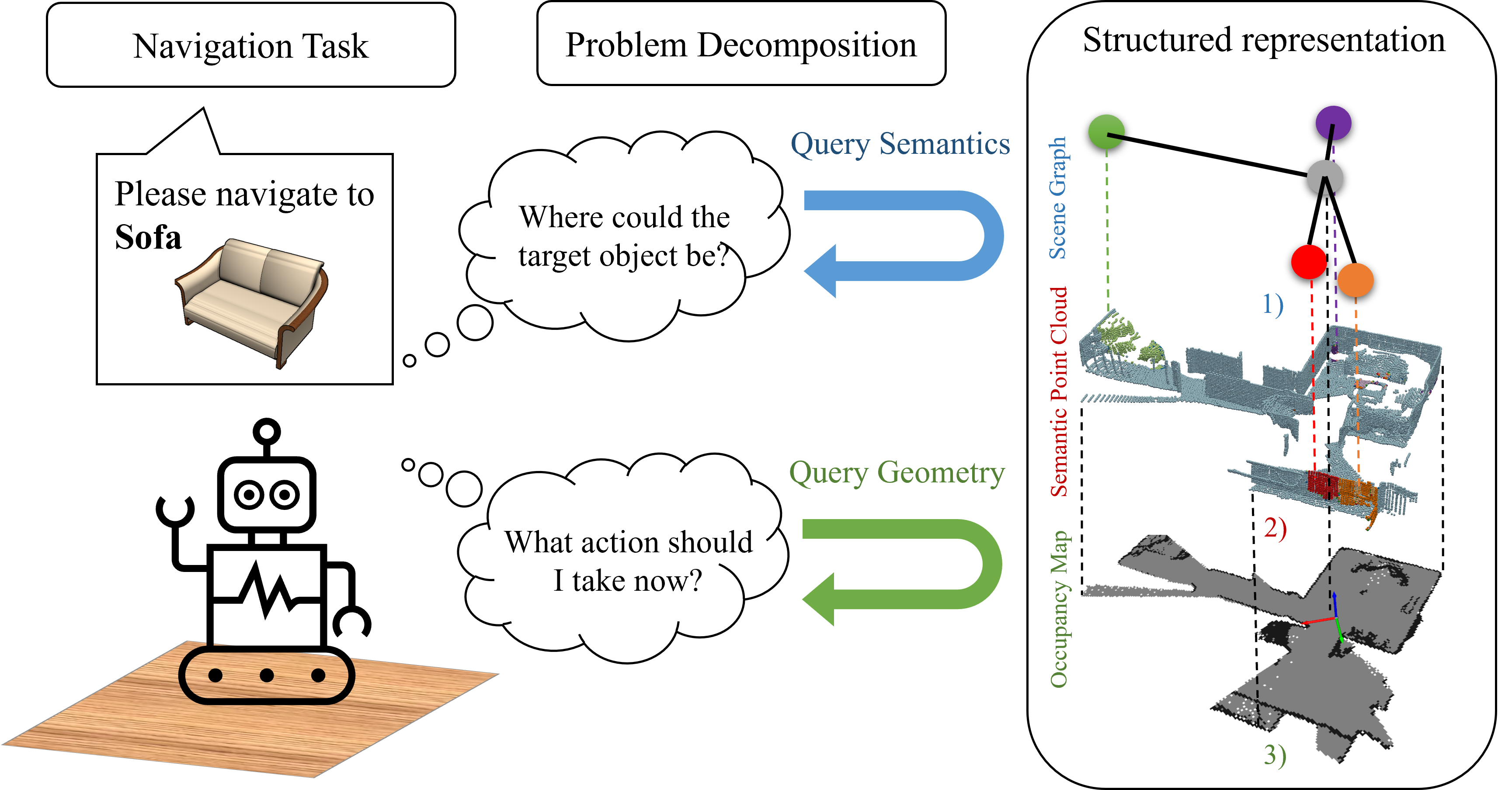

Summary: This work presents a modular, training-free system for the object goal navigation problem. The system builds a structured scene representation during active exploration, propagates semantics through the scene graph to infer the target location, and attaches those semantics to the geometric frontiers. With semantic frontiers, the agent navigates to the most promising areas to search for the goal object and avoids detours in unseen environments.


The proposed method builds a structured scene representation on the fly, which consists of a semantic point cloud, a 2D occupancy map, and a spatial scene graph. Then the scene graph is used to propagate semantics to the geometric frontiers. With semantic frontiers, the agent can navigate to the most promising areas to search for the goal object and avoid detours in unseen environments.
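To make the idea of a semantic frontier concrete, here is a minimal sketch, not the authors' implementation. It assumes a small 2D occupancy grid, finds geometric frontiers (free cells bordering unknown space), and ranks them by a distance-weighted sum of relevance scores from nearby observed objects. The paper propagates semantics through a scene graph; the simple distance weighting here is a stand-in for that step. All names (`find_frontiers`, `score_frontier`, `best_frontier`) and the relevance values are hypothetical.

```python
from math import hypot

# Cell labels for the toy occupancy grid (assumed encoding).
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontiers(grid):
    """Return free cells that touch at least one unknown cell (4-connectivity)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def score_frontier(cell, objects, radius=3.0):
    """Sum the relevance of objects within `radius`, decayed by distance."""
    total = 0.0
    for (obj_row, obj_col), relevance in objects:
        d = hypot(cell[0] - obj_row, cell[1] - obj_col)
        if d <= radius:
            total += relevance / (1.0 + d)
    return total


def best_frontier(grid, objects, radius=3.0):
    """Pick the frontier with the highest semantic score, or None if none exist."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None
    return max(frontiers, key=lambda f: score_frontier(f, objects, radius))


# Toy map: unknown space lies to the upper right and lower left.
grid = [
    [0, 0, 0, -1],
    [0, 1, 0, -1],
    [0, 0, 0, 0],
    [-1, -1, 0, 0],
]

# Observed objects as ((row, col), relevance to the goal); values are made up.
objects = [((0.0, 1.0), 0.2), ((2.5, 3.0), 0.9)]

print(find_frontiers(grid))
print(best_frontier(grid, objects, radius=2.0))
```

In this sketch the agent would head for the frontier nearest the highly relevant object rather than the closest frontier, which is the intuition behind using semantics to avoid detours.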


Junting Chen, Guohao Li, Suryansh Kumar, Bernard Ghanem, Fisher Yu. How To Not Train Your Dragon: Training-free Embodied Object Goal Navigation with Semantic Frontiers. In Robotics: Science and Systems (RSS), 2023. (hosted on arXiv)


Acknowledgements